You are looking to hire an outdoor education contractor to provide experiences for the students at the prestigious K-12 international school where you work.

You are considering three potential suppliers.

Each has provided you with safety information, including the number of incidents significant enough to require recording under health and safety record-keeping regulations. (Expressed as a rate, this figure is the Total Recordable Incident Rate, or TRIR: the number of recordable incidents per 200,000 work hours.)

- Organization 1
  - A start-up outdoor education provider in its first year of operation.
  - It had one recordable incident in its first 2,000 work hours.
  - It has, then, a TRIR of 100 per 200,000 work hours.
- Organization 2
  - A well-established outdoor education provider with several campuses, which runs expeditions in multiple countries.
  - It has had five recordable incidents over 800,000 work hours in the past year.
  - Its yearly TRIR is 1.3 per 200,000 work hours.
- Organization 3
  - A large multinational corporation with branches in multiple countries across multiple continents.
  - It has had 18 recordable incidents over 4 million work hours in the past year.
  - Its yearly TRIR is 0.9 per 200,000 work hours.

Using TRIR to Measure Safety Performance is Almost Always Statistically Invalid

Based on the safety information you have, you might think that Organization 3 is the best. Its Total Recordable Incident Rate is 0.9, which is lower than 1.3 (for Organization 2), and far less than 100 (for Organization 1).

But using TRIR as a measure of safety performance is almost always statistically invalid.

An understanding of probability and statistics tells us that Total Recordable Incident Rate is generally not predictive of safety performance.

It is therefore generally inappropriate to use Total Recordable Incident Rate to evaluate safety performance. This includes evaluating the safety performance of an organization, business unit, person, group of people, safety system, or change to a safety system.

For TRIR to be a statistically valid measure, it should be reported as a range—not as a single number. The range (or ‘confidence interval’) should be sufficiently narrow to have practical usefulness. This is generally possible only when evaluating millions of work hours over multiple years.

Let’s explore why this is the case.

Total Recordable Incident Rate

In many jurisdictions, health and safety authorities require companies to document significant safety incidents.

For example, in the USA, the Occupational Safety and Health Administration’s criteria for a recordable injury or illness include any work-related:

- Injury or illness that results in loss of consciousness, days away from work, restricted work, or transfer to another job

- Injury or illness requiring medical treatment beyond first aid

- Fatality

Because the number of recordable incidents per hour of work is typically a tiny fraction (such as 0.0000065), TRIR is usually expressed as a rate per 200,000 work hours, which gives an easier-to-use number (such as 1.3).

(Because 200,000 work hours is roughly the number of hours 100 full-time employees work in one year, at 2,000 hours per person, TRIR can be read as the percentage of workers who experience a recordable incident in a year. A TRIR of 4, for instance, means that about four percent of workers experienced a recordable incident that year, assuming no worker had more than one.)
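The arithmetic above fits in a one-line function. This is a minimal Python sketch; the function name `trir` and the dictionary of suppliers are illustrative, using the three hypothetical organizations from the opening example. Note that Organization 2’s unrounded rate is 1.25, which the example reports as 1.3.

```python
def trir(recordable_incidents: int, work_hours: float) -> float:
    """Total Recordable Incident Rate: recordable incidents per 200,000 work hours."""
    # 200,000 hours is roughly 100 full-time workers x 2,000 hours each for one year.
    return recordable_incidents * 200_000 / work_hours


# The three hypothetical suppliers from the opening example: (incidents, work hours).
organizations = {
    "Organization 1": (1, 2_000),
    "Organization 2": (5, 800_000),
    "Organization 3": (18, 4_000_000),
}

for name, (incidents, hours) in organizations.items():
    print(f"{name}: TRIR = {trir(incidents, hours):.2f}")
```

The only scaling involved is the ratio of 200,000 to the work hours, so a single incident produces a very different TRIR depending on how few or how many hours it is spread across.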

TRIR has been used as an indicator of safety performance for decades. It has been used to indicate how successful a safety program is, to compare companies and potential contractors with one another, and to assess the job performance of individual employees and teams.

TRIR is also known as TRIF (Total Recordable Incident Frequency) or TRIFR (Total Recordable Incident Frequency Rate).

But TRIR is generally not a useful measure of safety performance, due to the unpredictable nature of incident occurrence.

In the example above, Organization 1 had an incident before it accumulated many work hours, leading to a very high incident rate on a per-work-hour basis. But this doesn’t mean that it will have another incident any time soon.

TRIR is only effective in predicting future safety outcomes when looking at millions of work hours over 100 or more months.

Confidence Intervals, or “Margin of Error”

Let’s look more closely at our three organizations, and their Total Recordable Incident Rate.

We’ve expressed TRIR as a single number: the number of incidents per 200,000 work hours, over a preceding time period (such as a year).

For instance, with Organization 2 described above, there were five recordable incidents over 800,000 work hours, resulting in a TRIR of 1.3. But it’s unlikely that there will be exactly five incidents in the next 800,000 work hours, resulting again in a TRIR of 1.3.

Expressed as a single number, TRIR isn’t very helpful to us: without millions of work hours to assess, it doesn’t predict future performance.

We can get some additional information about the organization’s safety record by looking at the margin of error, or what statisticians call the confidence interval, of the TRIR results.

A confidence interval is a range, between a lower and an upper value, that is expected to contain the true value with a stated level of confidence.

For example, one could express TRIR as a range between two values, X and Y, with 95 percent confidence.

Loosely speaking, this means there’s only about a five percent chance that the true rate lies outside the range between X and Y.

How is this confidence interval determined?

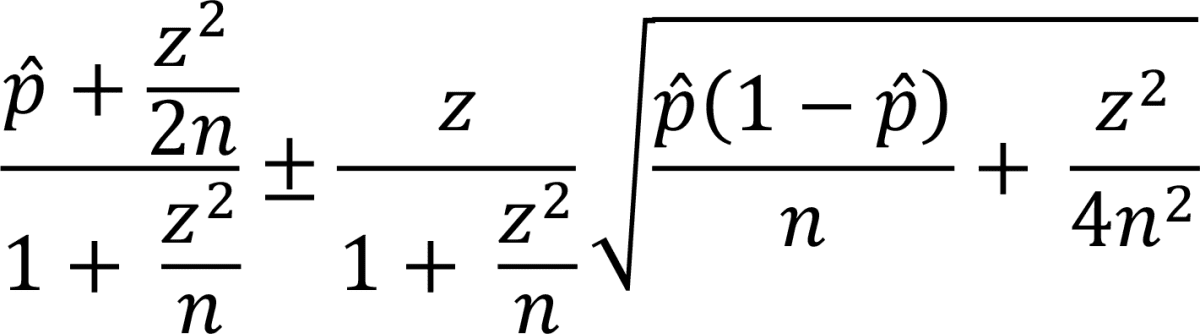

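We can determine the 95 percent confidence interval with the following Wilson confidence interval expression:

$$
\frac{\hat{p} + \dfrac{z^2}{2n} \pm z\sqrt{\dfrac{\hat{p}\,(1-\hat{p})}{n} + \dfrac{z^2}{4n^2}}}{1 + \dfrac{z^2}{n}}
$$

where p̂ is the observed number of incidents per work hour (the number of recordable incidents divided by n), n is the number of work hours, and z is the value of a standard normal distribution for the target confidence level (1.96 for a 95 percent confidence interval). Multiplying the resulting lower and upper bounds by 200,000 expresses the range per 200,000 work hours, in the same units as TRIR.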

This gives the following results:

- Organization 1
  - One recordable incident in its first 2,000 work hours
  - TRIR: 100
  - TRIR range: 17.65 to 565.39 per 200,000 work hours
- Organization 2
  - Five recordable incidents over 800,000 work hours
  - TRIR: 1.3
  - TRIR range: 0.53 to 2.93 per 200,000 work hours
- Organization 3
  - 18 recordable incidents over 4 million work hours
  - TRIR: 0.9
  - TRIR range: 0.57 to 1.42 per 200,000 work hours
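The ranges above can be reproduced with a short script. This is a sketch under one stated assumption: each work hour is treated as an independent trial with a small chance of a recordable incident, which is how the per-hour Wilson calculation is set up. The function name `wilson_interval` is our own, not a library API.

```python
import math


def wilson_interval(incidents: int, work_hours: float, z: float = 1.96) -> tuple[float, float]:
    """Wilson score interval for the per-hour incident proportion.

    Returns (lower, upper), scaled to incidents per 200,000 work hours.
    """
    n = work_hours
    p_hat = incidents / n  # observed incidents per work hour
    z2 = z * z
    center = p_hat + z2 / (2 * n)
    margin = z * math.sqrt(p_hat * (1 - p_hat) / n + z2 / (4 * n * n))
    denominator = 1 + z2 / n
    lower = (center - margin) / denominator
    upper = (center + margin) / denominator
    # Scale from "per work hour" to "per 200,000 work hours", like TRIR itself.
    return lower * 200_000, upper * 200_000


# The three hypothetical suppliers: (incidents, work hours).
organizations = {
    "Organization 1": (1, 2_000),
    "Organization 2": (5, 800_000),
    "Organization 3": (18, 4_000_000),
}

for name, (incidents, hours) in organizations.items():
    low, high = wilson_interval(incidents, hours)
    print(f"{name}: {low:.2f} to {high:.2f} per 200,000 work hours")
```

Printed to two decimal places, the output matches the listed ranges: 17.65 to 565.39, 0.53 to 2.93, and 0.57 to 1.42.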

Confidence Interval: Implications for Organization 1

Organization 1’s observed incident rate for its first 2,000 work hours was 100 incidents per 200,000 work hours.

Our analysis shows that, with 95 percent confidence, Organization 1’s underlying TRIR, the rate we would expect it to show over the long run, lies somewhere between about 18 and 565.

This, however, is not useful information. A range from 18 to 565 incidents per 200,000 work hours is so broad, with the upper bound more than 30 times the lower, that we can’t reasonably draw any useful conclusions from this data.

Confidence Interval: Implications for Organizations 2 and 3

We can say with 95 percent confidence that Organization 2’s underlying TRIR lies between 0.53 and 2.93.

And we can say with 95 percent confidence that Organization 3’s underlying TRIR lies between 0.57 and 1.42.

Notice that the range for Organization 3 is completely within the range for Organization 2.

The ranges overlap entirely, with one nested inside the other. This indicates that the two incident records are statistically indistinguishable.

We now can understand that we simply don’t have enough data to make meaningful conclusions about the likely future safety performance of Organization 1.

And statistical analysis shows us that there is no discernible difference between the predicted future safety performance of Organization 2 and Organization 3.
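Overlapping ranges are an informal signal rather than a formal test. As a cross-check using a different technique from the one described in this article, a standard exact conditional test for comparing two Poisson rates can be sketched in Python. It assumes incidents at each organization occur independently at a constant underlying rate; the function names are hypothetical.

```python
from math import comb


def binomial_pmf(k: int, n: int, p: float) -> float:
    """Probability of exactly k successes in n independent trials."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)


def compare_rates(incidents_a: int, hours_a: float, incidents_b: int, hours_b: float) -> float:
    """Two-sided exact conditional test of equal incident rates (Poisson model).

    If both organizations share one underlying rate, then, given the combined
    number of incidents, organization A's share is Binomial(total, p_null),
    where p_null is A's share of the combined work hours.
    """
    total = incidents_a + incidents_b
    p_null = hours_a / (hours_a + hours_b)
    observed = binomial_pmf(incidents_a, total, p_null)
    # Add up every outcome no more likely than the one actually observed
    # (the small tolerance guards against floating-point ties).
    return sum(
        prob
        for prob in (binomial_pmf(k, total, p_null) for k in range(total + 1))
        if prob <= observed * (1 + 1e-9)
    )


p_value = compare_rates(5, 800_000, 18, 4_000_000)
print(f"Organization 2 vs Organization 3: p = {p_value:.2f}")
```

With these figures the test gives a p-value of roughly 0.57, far above the conventional 0.05 threshold. The data are entirely consistent with the two organizations sharing the same underlying rate, although that does not prove that they do.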

This, then, helps us understand the statistical invalidity of using TRIR as a predictor of safety outcomes.

Incident occurrence, in the words of one safety researcher, “may exhibit periods of high frequency, interspersed with periods of what appears to be good safety performance with few events.”

But these unpredictable periods of fluctuation, the researcher continued, “can, in some cases, lead an organisation to an erroneous assessment of the true state of its safety performance.”

TRIR: Random and Unpredictable

Many factors influence safety outcomes, and we can’t be aware of and understand all the relevant factors and how they interact.

A Monte Carlo simulation conducted by safety researchers indicated that TRIR is 96 to 98 percent random. In other words, only two to four percent of the variation in TRIR reflected anything other than chance. “TRIR is almost entirely random,” the researchers noted.

This reflects the complex nature of incident causation.

Due to unpredictable variation in safety performance, tests showed that at least 100 months of data were needed to achieve reasonably accurate predictions.
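A small simulation can illustrate why so many months are needed. This is not the researchers’ Monte Carlo model. It assumes a hypothetical organization with a constant underlying TRIR of 1.0, 100,000 work hours per month, and incidents occurring independently (a Poisson process), then checks how often a TRIR calculated from 12 months versus 100 months of data lands within 25 percent of the true value.

```python
import math
import random


def poisson_draw(rng: random.Random, mean: float) -> int:
    """Draw a Poisson-distributed count using Knuth's method (fine for small means)."""
    limit = math.exp(-mean)
    count = 0
    product = rng.random()
    while product > limit:
        count += 1
        product *= rng.random()
    return count


def share_within_25_percent(months: int, trials: int, rng: random.Random) -> float:
    """Fraction of simulated TRIR estimates that land within 25% of the true rate."""
    true_trir = 1.0              # hypothetical constant underlying rate
    hours_per_month = 100_000    # hypothetical organization size
    mean_per_month = true_trir * hours_per_month / 200_000  # 0.5 incidents per month
    hits = 0
    for _ in range(trials):
        incidents = sum(poisson_draw(rng, mean_per_month) for _ in range(months))
        observed_trir = incidents * 200_000 / (hours_per_month * months)
        if abs(observed_trir - true_trir) <= 0.25 * true_trir:
            hits += 1
    return hits / trials


rng = random.Random(42)  # fixed seed so runs are repeatable
for months in (12, 100):
    share = share_within_25_percent(months, trials=2_000, rng=rng)
    print(f"{months:>3} months of data: {share:.0%} of estimates within 25% of the true TRIR")
```

Under these assumptions, fewer than half of the one-year estimates should fall within 25 percent of the true rate, while roughly nine in ten of the 100-month estimates should, even though the organization’s underlying safety performance never changes.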

Most workplace safety analyses use fewer than 100 months of data, leading the researchers to state, “for all practical purposes, TRIR is not predictive.”

The researchers concluded, “Unless hundreds of millions of work hours are amassed, the confidence bands are so wide that TRIR cannot be accurately reported…the implication is that the TRIR, for almost all companies, is virtually meaningless.”
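The Wilson confidence interval described earlier in this article shows how slowly the confidence band narrows as work hours accumulate. This sketch assumes a hypothetical organization with a true TRIR of 1.0 whose observed incident count exactly matches its expected count; real data would scatter around that. The function name `wilson_interval` is our own.

```python
import math


def wilson_interval(incidents: float, work_hours: float, z: float = 1.96) -> tuple[float, float]:
    """Wilson score interval for the per-hour incident rate, scaled per 200,000 hours."""
    n = work_hours
    p_hat = incidents / n
    z2 = z * z
    center = p_hat + z2 / (2 * n)
    margin = z * math.sqrt(p_hat * (1 - p_hat) / n + z2 / (4 * n * n))
    denominator = 1 + z2 / n
    return ((center - margin) / denominator * 200_000,
            (center + margin) / denominator * 200_000)


TRUE_TRIR = 1.0  # hypothetical underlying rate
for work_hours in (200_000, 2_000_000, 20_000_000, 200_000_000):
    # Assume the observed count happens to equal the expected count exactly.
    incidents = TRUE_TRIR * work_hours / 200_000
    low, high = wilson_interval(incidents, work_hours)
    print(f"{work_hours:>11,} hours: {low:.2f} to {high:.2f} "
          f"(upper bound is {high / low:.1f}x the lower)")
```

Under these assumptions, the upper bound is more than 30 times the lower at 200,000 hours, falls to roughly 1.5 times at 20 million hours, and is still about 13 percent above the lower bound at 200 million hours.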

Problems with All Lag Measures

The inability to get useful information from TRIR/TRIFR applies to similar lag measures, or lagging indicators.

These include the Lost Workday Incident (LWDI) rate and the Lost Time Injury Frequency Rate (LTIFR).

This led a health and safety governance report, developed with funding from Aotearoa New Zealand’s workplace health and safety regulator, Mahi Haumaru Aotearoa WorkSafe, to conclude, “LTIFR and TRIFR are not an indicator of how healthy and safe work is in an organisation.”

The 2023 report continued, “The evidence is that fluctuations in TRIFR rates are statistical noise, and that there is no relationship between TRIFR and risk of serious and fatal accidents.”

The report stated that monitoring TRIFR and LTIFR can cause harm, due to an “artificial and incorrect sense of accuracy,” and recommended that lag indicators not be used as health and safety performance measures.

TRIR Not Associated with Fatality Occurrence

The occupational safety researchers who identified these problems with using TRIR to measure safety performance also noted that research shows changes in TRIR are not statistically associated with fatality rates.

“Fatalities appear to follow different patterns,” the researchers stated, suggesting that fatal incidents occur for different reasons than more common recordable incidents do.

This suggests that the idea that injuries of different severities occur in fixed ratios (the controversial “Heinrich safety pyramid” or “accident triangle”) may not be correct.

The research also suggests it is incorrect to hold that reducing the rate of total recordable injuries reduces the risk of high-severity incidents.

When Not To Use TRIR

TRIR and similar lagging indicators, the research indicates, are not useful in evaluating the following:

- Organizations, such as businesses providing outdoor, travel or adventure activities, when comparing a company against its competitors

- Potential contractors (vendors, suppliers)

- Business units or projects

- Individual persons, or teams of people

- Adequacy of safety systems

- Effectiveness of changes to safety systems—that is, whether initiatives to improve risk management are effective

One safety research group noted, “If an organization uses TRIR for performance evaluations, it is likely rewarding nothing more than random variation.”

Signs at a company entrance proclaiming “X person-hours without a lost-time incident” are also not meaningful from a statistical perspective. While signs do have a role in promoting a positive culture of safety, communications other than lag indicator reporting are likely to be more effective in improving safety-related values, beliefs, and behaviors.

Additional Weaknesses in TRIR

TRIR has also been criticized for counting every recordable incident equally, without distinguishing between relatively minor incidents, such as a cut requiring stitches, and critical incidents such as a fatality.

TRIR also fails to record “near misses”: mishaps that almost occurred but were narrowly avoided. These near misses can offer valuable learning opportunities for improving safety, before a catastrophe occurs.

Recordable incidents tend to be rapid-onset (acute) injuries, such as fractures or bleeding from a fall. But TRIR generally doesn’t capture long-term health and safety effects, such as skin cancer arising after years or decades of exposure to the sun, or cardiovascular disease resulting from years of exposure to a psychologically stressful work environment.

TRIR simply provides a number, or a range, and does not indicate where safety problems are, or what should be done about them.

Using Incident Rates

In some circumstances, using incident rates can be useful.

TRIR is more usefully expressed not as a single number but as a range, with a suitably narrow confidence interval. This is possible if large datasets are available.

When using millions of work hours over 100 or more months, TRIR can be used to understand incident rates across industries.

For example, legislators and policy-makers can apply useful TRIR data to establish strategy and policy for prioritizing regulatory action, or to set funding priorities for governmental investments in health and safety. Regulators may use large TRIR datasets to set workers’ compensation insurance rates.

These applications are unlikely to be of use to providers of outdoor, travel, experiential and adventure programs.

However, managers of trips, excursions and adventurous activities can look through the details of incident data to identify trends and patterns that can lead to improvements in risk management.

For example, an increased rate of heat illness on hiking trips might motivate an administrator to change activity procedures to avoid hiking in the hottest part of the day, encourage hydration, promote wearing of protective clothing, or change which areas are used for vigorous outdoor activities during the hottest times of the year.

The Futility of Asking Why

Outdoor, travel and adventure programs exist in a complex sociotechnical system of people and things. In such a complex system, it can be impossible to identify all the individual risks that might lead to an incident. It is similarly impossible to predict which risk factors might come together, at one time, to bring about a mishap.

When an incident occurs, it may not be possible to identify all the elements that led to it, or which of them contributed most to its causation.

Consequently, when an incident happens, it may be less helpful to ask “Why did this incident occur?” (which can be unknowable), or “Could this incident have been prevented?” (the answer, in hindsight, is virtually always yes).

Instead, it may be more useful to ask, “What steps can we take to help prevent the occurrence of similar incidents in the future?”

This helps us avoid trying to solve yesterday’s problems. It allows us to focus on identifying what can be done to build resilient, multi-layered and effective safety systems to reduce the probability and magnitude of any future incidents.

Evaluating Safety Systems

If Total Recordable Incident Rate is a largely ineffective tool for assessing the effectiveness of risk management measures, what can be used to identify strengths and areas of improvement in an organization’s safety systems?

In general, an appropriate approach to safety is to reduce risks so far as is reasonably practicable (SFAIRP).

This means identifying specific risks that might exist for an organization, and implementing policies, procedures, values and systems to keep those risks as low as reasonably practicable.

And it means using all suitable risk management instruments to reduce risk across an entire organization. These broad-based tools include risk transfer, such as insurance policies and liability waivers; rehearsed emergency plans; detailed incident reporting and analysis; formal review of major incidents; a risk management committee; screening of staff and participants for medical suitability; formal risk management reviews or safety audits; skill in news media relations; suitable documentation of procedures and actions; and relevant accreditation schemes.

Organizations can seek to identify and meet safety and quality standards in the educational travel, experiential adventure, outdoor recreation or other relevant industries. These standards include:

- Activity Safety Guidelines published by the New Zealand adventure sector

- Good Practice Guides published by the Australian adventure sector

- Adventure Safety Accreditation standards from Viristar, and other accreditation standards

And organizations should take reasonable steps to understand and comply with laws and regulations relevant to their circumstances.

Note that these steps are not retrospective—these don’t involve looking backwards at past performance. Instead, their focus is on proactively identifying steps that can be taken to reduce the likelihood and severity of potential future incidents.

Conclusion

It was the beginning of the summer, and the director of the outdoor adventure program addressed the staff of wilderness educators assembled for start-of-season training.

The director gave their safety talk, proudly announcing that the organization’s lost-day cases had dropped from four the year before to two last season. “You’re doing a great job,” they said. “This shows a real improvement. Keep up the good work!”

One of the field staff, who happened to have a mathematics degree from Princeton University, muttered audibly, “That’s statistically invalid!”
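The field staff member had a point. As a rough check, assume (hypothetically, since the story doesn’t say) that both seasons involved the same number of work hours and that lost-day cases occur independently at a constant rate. Under those assumptions, an exact binomial test asks how surprising a two-versus-four split would be if nothing had actually changed.

```python
from math import comb

# Hypothetical assumption: both seasons had the same number of work hours.
# If nothing really changed, each of the 6 lost-day cases was equally likely
# to fall in either season, so last season's count is Binomial(6, 0.5).
last_season, previous_season = 2, 4
total = last_season + previous_season


def pmf(k: int) -> float:
    """Probability that exactly k of the cases fall in last season."""
    return comb(total, k) / 2**total


observed = pmf(last_season)
# Two-sided p-value: sum every outcome no more likely than the observed split.
p_value = sum(pmf(k) for k in range(total + 1) if pmf(k) <= observed)
print(f"Two-sided p-value: {p_value:.4f}")  # 44/64 = 0.6875
```

A p-value of about 0.69 means a drop from four cases to two is exactly the kind of variation chance alone produces.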

We can use a basic understanding of statistics and probability to avoid drawing inaccurate conclusions from safety data.

For the independent school coordinator seeking to identify a third-party provider to deliver outdoor adventure learning experiences to the school’s students, it is now clear that Total Recordable Incident Rate data do not provide sufficient information to evaluate the likely safety performance of each potential contractor.

Instead, a thorough contractor evaluation—investigating items such as safety procedures, relevant experience, staff qualifications, equipment management, insurance coverage, inspection results, accreditation status, professional references, suitability of physical facilities, and emergency plans—can help illuminate whether the potential supplier’s risk management practices are suitable.

Outdoor and adventure professionals interested in learning more about safety standards and good practice for school excursions, camps, treks, adventure tourism and outdoor education programs can continue their professional development and learn essential safety skills through Viristar’s 40-hour training course, Risk Management for Outdoor Programs.